Implementing Sidecar Design Pattern with Kubernetes Pod

Build a Git Workflow with Sidecar

The sidecar pattern is a design approach in which some of an application’s responsibilities are moved into a separate process that runs alongside it. Basically, this pattern allows us to add more capabilities to an application without building third-party components into the application itself.

In today’s post, we will look at the following topics:

- What is the Sidecar Pattern?

- Applications of Sidecar Pattern

- Implementing Sidecar Pattern with a Kubernetes Deployment

So, without wasting any time, let’s start with the first point.

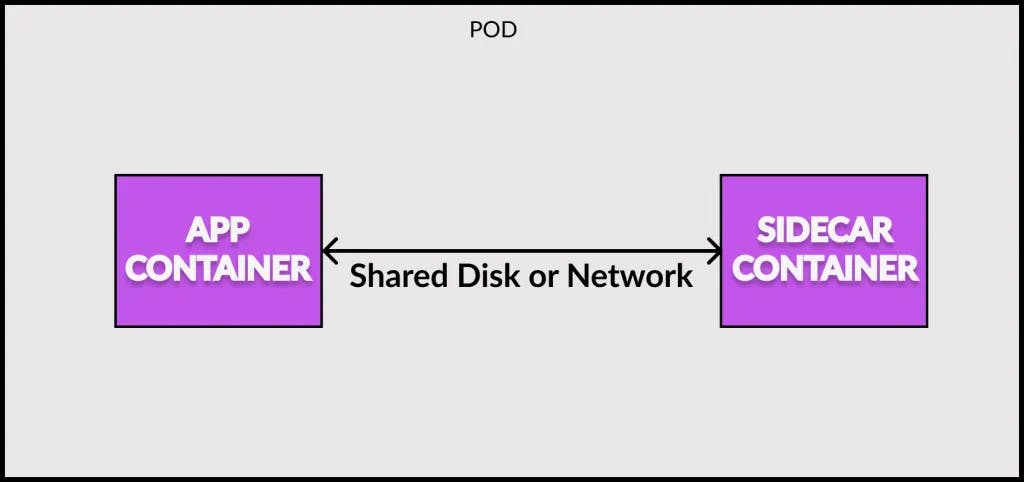

1 - Sidecar Pattern in Distributed Systems

In the context of distributed systems, the sidecar pattern is a single-node pattern, meaning that all of its containers run on the same machine. The pattern is made up of two containers:

- Application Container

- Sidecar Container

The application container contains the core logic for the application. On the other hand, the role of the sidecar container is to augment and improve the application container. Moreover, this improvement often happens without the application container’s explicit knowledge.

Basically, we can use a sidecar container to add functionality to the application container without making significant changes to the application itself.

On an implementation level, sidecar containers are usually co-scheduled to the same machine by using a container group such as the Kubernetes Pod API. Typically, the application container and the sidecar container share a number of resources such as:

- Parts of the filesystem

- Hostname and network

- Namespaces

The below illustration depicts the concept of a sidecar pattern:

2 - Applications of Sidecar Pattern

There are several applications of a sidecar pattern. Let us look at a few of the most common use-cases:

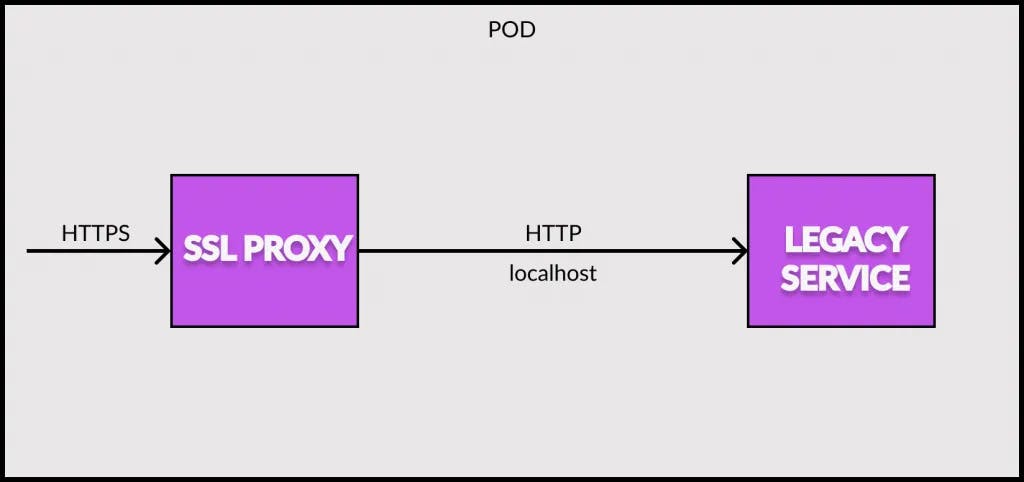

2.1 - Adding HTTPS to Legacy Application Service

This is one of the most common uses of a sidecar pattern. In this use-case, we may have a legacy application service serving requests over unencrypted HTTP and due to some security requirements, we want the service to use HTTPS going forward.

One approach would be to modify the application source code to use HTTPS followed by rebuilding and redeploying the application. This might be an easy task for a single application.

But what if there are several services that need to be modified?

The sidecar pattern can help in this case.

See below illustration that showcases the sidecar approach to solve the above requirement.

In this case, we configure the legacy service to serve exclusively on localhost. This means that only processes that share the pod’s network namespace can access the service directly.

Next, we use the sidecar pattern to add an nginx sidecar container that acts as an SSL proxy. The nginx container lives in the same network namespace as the legacy service, so it can reach the legacy service running on localhost. The nginx sidecar terminates the HTTPS traffic on the external IP address of the pod and forwards the requests to the legacy service over plain HTTP.
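As a rough sketch of what the proxy configuration for such a sidecar might look like, the script below writes a minimal nginx server block. The file name, the legacy service port (8080) and the certificate paths under /etc/nginx/tls are assumptions made for illustration; they do not come from the setup described above.

```shell
#!/bin/sh
# Write a minimal nginx server block that terminates TLS and forwards
# requests to the legacy service over plain HTTP on localhost.
# Assumptions (hypothetical): the legacy service listens on 127.0.0.1:8080
# and the certificate and key are mounted under /etc/nginx/tls.
cat > tls-proxy.conf <<'EOF'
server {
    listen 443 ssl;

    ssl_certificate     /etc/nginx/tls/tls.crt;
    ssl_certificate_key /etc/nginx/tls/tls.key;

    location / {
        # Both containers share the pod's network namespace,
        # so localhost reaches the legacy container.
        proxy_pass http://127.0.0.1:8080;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto https;
    }
}
EOF
```

With the official nginx image, a file like this would typically be placed in /etc/nginx/conf.d/, and the certificate would be mounted into the sidecar from a Kubernetes secret.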

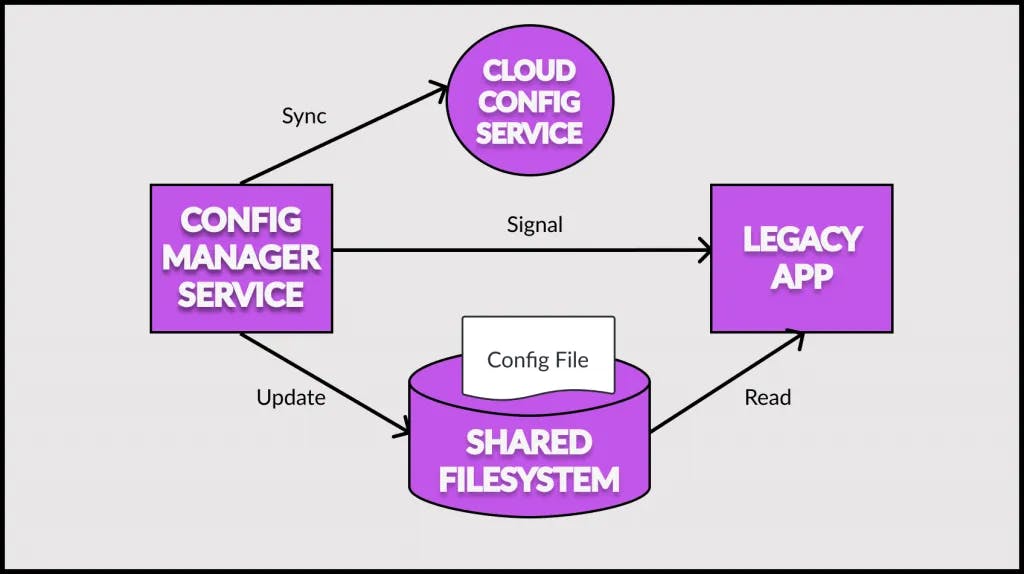

2.2 - Supporting Dynamic Configuration with Sidecar

Another common use of the sidecar pattern is to support dynamic configuration.

Most applications use some sort of configuration file for parameterizing the application. This configuration file can be plain text or a structured format such as XML, JSON or YAML.

Most existing applications are written in a way to expect the configuration file to be present on the filesystem. Modifying a configuration means changing the contents of the file on the filesystem. However, in modern cloud environments, the usual practice is to update configuration parameters using APIs. This makes the configuration information dynamic.

But how do we handle this scenario for legacy applications that rely on their filesystem for configuration?

Modifying the applications is one option but it is usually time consuming and costly. The other option is to leverage the sidecar pattern for managing the configurations.

Check out the below illustration:

In this setup, we have two containers: one serving the application and one managing its configuration. Both containers are part of the same pod and share a directory between themselves. The application configuration file is maintained within this shared directory.

The legacy application loads the configuration from the filesystem as it has been programmed to do. However, when the configuration manager starts, it performs a few important steps:

- It checks the configuration API hosted in the cloud.

- If the configuration in the cloud differs from the copy on the shared filesystem, it downloads the cloud configuration to the shared filesystem.

- Finally, it signals the legacy application to reconfigure itself. An application that supports it can reload its configuration on SIGHUP. Otherwise, the configuration manager can send SIGKILL, which cannot be caught and simply terminates the application; the container orchestrator then restarts it and it loads the new configuration on startup.
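The steps above can be sketched as a small shell function. This is only an illustration under a few assumptions: the function name sync_config and its arguments are hypothetical, the download from the configuration API is assumed to have already produced a local file, and the sidecar is assumed to be able to see the application’s process (for example through a shared process namespace).

```shell
#!/bin/sh
# sync_config REMOTE_COPY SHARED_FILE APP_PID   (hypothetical helper)
#   REMOTE_COPY  configuration already downloaded from the cloud API
#   SHARED_FILE  configuration file in the directory shared with the app
#   APP_PID      process ID of the legacy application
sync_config() {
    remote_copy=$1
    shared_file=$2
    app_pid=$3

    # Nothing to do when the shared file already matches the cloud version.
    if cmp -s "$remote_copy" "$shared_file"; then
        echo "unchanged"
        return 0
    fi

    # Copy to a temporary name first, then rename, so the application
    # never reads a half-written file.
    cp "$remote_copy" "$shared_file.tmp"
    mv "$shared_file.tmp" "$shared_file"

    # Ask the application to reload its configuration.
    kill -HUP "$app_pid"
    echo "updated"
}
```

A sidecar would call this function in a loop after each download, for example `sync_config /tmp/latest.conf /config/app.conf "$APP_PID"`, where all three values are placeholders.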

2.3 - Building Modular App Containers

While supporting legacy applications with new features is a great application for the sidecar pattern, we can also use this pattern for improving our system’s modularity and reusability.

For example, any real-world application has functionality related to debugging, logging and monitoring. In such cases, we can build these functionalities as separate components with platform-agnostic interfaces such as REST APIs.

These modular and reusable components can be deployed as sidecars. Such a container can be configured to share the process ID (PID) namespace with the application container, which lets it inspect the application’s processes, but it remains separate from the main application container.
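In Kubernetes, containers in a pod do not share a process namespace by default; the `shareProcessNamespace` field of the pod spec turns this on. The script below writes a minimal pod manifest that illustrates the idea. The pod name, the application image and the sidecar command are hypothetical placeholders.

```shell
#!/bin/sh
# Write a pod manifest in which a debugging sidecar can see the
# application's processes. Names and images are placeholders.
cat > modular-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: app-with-debug-sidecar        # hypothetical name
spec:
  shareProcessNamespace: true         # all containers share one PID namespace
  containers:
  - name: app
    image: example.com/my-app:latest  # hypothetical application image
  - name: debug-sidecar
    image: busybox
    # List the processes of every container in the pod every 30 seconds.
    command: ["sh", "-c", "while true; do ps; sleep 30; done"]
EOF
```

The same sidecar container definition could be reused unchanged next to any other application container, which is the reusability benefit described above.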

Of course, a modular component tends to be more standardized and less tailored to the specifics of any one application. As with most things in software development, there are trade-offs that should be considered before making the decision.

3 - Sidecar Design Pattern with Kubernetes Pod Example

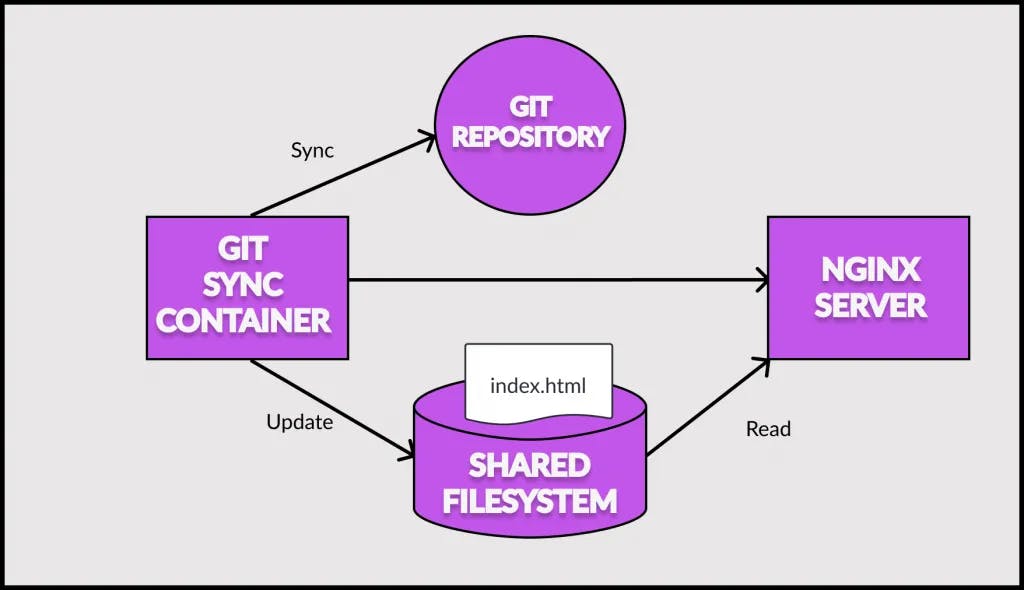

Now that we have understood the basics of the sidecar pattern along with various applications of the pattern, it is time to look at a demo.

In this demo, we will use the sidecar pattern to implement a basic Git workflow that deploys new code to a running service. Check out the below illustration of our demo:

Let us understand what is going on here:

- The Git sync container and the Nginx server container are part of the same Kubernetes pod.

- The Nginx server serves the index.html file saved in the filesystem shared between the two containers.

- On the other hand, the Git sync container polls a Git repository where we have the latest copy of the index.html file. It fetches the latest version of the file and updates it in the shared filesystem.

- Whenever we push changes to our Git repository, the updated file makes its way to the shared filesystem and is served by the Nginx server.

We can now try to implement the above workflow in a step-by-step manner.

3.1 - Deploying the Nginx Container

In the first step, let us deploy the Nginx container in a Kubernetes pod.

Below is a very basic index.html file.

    <html>
      <body bgcolor="#FFFFFF">
        <h1><strong>This is sidecar pattern commit once more!</strong></h1>
      </body>
    </html>

Next, we create a Dockerfile for the nginx container.

    FROM nginx
    ADD index.html /usr/share/nginx/html/index.html

Basically, all we are doing is pulling the official nginx image and copying the index.html to the nginx file system. We can build the image using the below Docker command.

    $ docker build -t progressivecoder/nginx .

Next, we create the Kubernetes Deployment manifest (deployment.yaml) for deploying the image.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx
      name: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - image: progressivecoder/nginx
            name: nginx
            imagePullPolicy: Never
            ports:
            - containerPort: 80

Lastly, we have the Kubernetes service (service.yaml) to expose the nginx deployment outside the cluster.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: nginx
      name: nginx
    spec:
      ports:
      - name: 80-80
        port: 8080
        protocol: TCP
        targetPort: 80
      selector:
        app: nginx
      type: NodePort

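Note that the service type is NodePort. Inside the cluster, the service listens on port 8080 and forwards traffic to port 80 of the nginx container. From outside the cluster, it is reachable on a node port that Kubernetes assigns on every node.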

Finally, we can apply these templates to the Kubernetes cluster using the below commands:

    $ kubectl apply -f deployment.yaml
    $ kubectl apply -f service.yaml
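Once the service has been applied, the node port that Kubernetes picked can be looked up with kubectl. The helper below is a small sketch of that lookup; the function name service_url is hypothetical, and the node IP must be supplied by the caller because it depends on the cluster.

```shell
#!/bin/sh
# service_url SERVICE NODE_IP   (hypothetical helper)
# Prints the URL at which a NodePort service is reachable from outside
# the cluster.
service_url() {
    service=$1
    node_ip=$2
    # Ask Kubernetes which node port was allocated to the first service port.
    node_port=$(kubectl get service "$service" -o jsonpath='{.spec.ports[0].nodePort}')
    echo "http://$node_ip:$node_port"
}
```

On a Minikube cluster, for example, it could be called as `service_url nginx "$(minikube ip)"`.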

3.2 - Deploying the Git Synchronization Container

Now, we can deploy the Git synchronization container as part of the same pod. This is basically our sidecar container.

First, we create a shell script (page-refresher.sh) that downloads a particular source file every 5 seconds and copies it to the Nginx directory /usr/share/nginx/html. Note that the right polling frequency depends on your use-case.

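    #!/bin/sh
    # Download STATIC_SOURCE every 5 seconds and publish it as html/index.html.
    # The alpine base image ships sh (BusyBox), not bash.
    while :
    do
      echo "$(date +%x_%r)"
      # Replace the served file only when the download succeeded.
      wget --no-cache -O temp.html "$STATIC_SOURCE" && mv temp.html html/index.html
      sleep 5
    done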

To execute the shell script, we create a basic image using the alpine distro. Below is the Dockerfile (Dockerfile-script) for the same. If you are new to Docker, you can read more about it in our post on basics of Docker.

    FROM alpine
    WORKDIR /usr/share/nginx
    ADD page-refresher.sh page-refresher.sh
    ENTRYPOINT sh page-refresher.sh

To build the image, execute the below Docker build command.

    $ docker build -t progressivecoder/sidecar -f Dockerfile-script .

Next, we need to update the deployment.yaml file as below for adding the sidecar container to the pod.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx
      name: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - image: progressivecoder/sidecar
            name: sidecar
            imagePullPolicy: Never
            env:
            - name: STATIC_SOURCE
              value: https://raw.githubusercontent.com/dashsaurabh/sidecar-demo/master/index.html
            volumeMounts:
            - name: shared-data
              mountPath: /usr/share/nginx/html
          - image: progressivecoder/nginx
            name: nginx
            imagePullPolicy: Never
            ports:
            - containerPort: 80
            volumeMounts:
            - name: shared-data
              mountPath: /usr/share/nginx/html/
          volumes:
          - name: shared-data
            emptyDir: {}

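Note the containers section, which now lists two containers: sidecar and nginx. Both mount the same shared-data volume at /usr/share/nginx/html, so they share the storage location for the index.html file. Because this emptyDir volume starts out empty and hides the index.html baked into the nginx image, Nginx serves the page only after the sidecar’s first successful download. Also, the sidecar container has an environment variable, STATIC_SOURCE, which points to the GitHub URL where we keep the updated index.html. Basically, this is the source code of the application.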

We can apply the deployment changes once again using the below command. This will update the Kubernetes pod deployment.

    $ kubectl apply -f deployment.yaml

Once the deployment is live, our git workflow process is complete.

To test the workflow, follow the below steps:

- Push a new change to the index.html file in the GitHub repository.

- Wait for the sidecar container to pull the latest change and save it in the shared filesystem. The overall time depends on the polling interval in the shell script plus the amount of time it takes for the GitHub repository to reflect the changes.

- The Nginx server will start serving the updated HTML file after some time.

- Access the application using a node’s IP address and the node port assigned to the nginx service (kubectl get service nginx shows it) to view the HTML page in the browser.
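Instead of refreshing the browser by hand, the wait in the steps above can be scripted. The function below is a minimal sketch that polls a URL until the page contains an expected piece of text; the name wait_for_text, the default of 12 attempts and the 5-second pause are assumptions chosen to mirror the polling interval of the sidecar.

```shell
#!/bin/sh
# wait_for_text URL EXPECTED [ATTEMPTS]   (hypothetical helper)
# Polls URL until the response contains EXPECTED.
# Returns 0 on success and 1 if the text never appeared.
wait_for_text() {
    url=$1
    expected=$2
    attempts=${3:-12}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -q silences progress output, -O - writes the page to stdout.
        if wget -q -O - "$url" | grep -qF -- "$expected"; then
            return 0
        fi
        i=$((i + 1))
        sleep 5
    done
    return 1
}
```

It could be called with the service URL and a phrase from the new commit, for example `wait_for_text "http://NODE_IP:NODE_PORT" "commit once more"`, where NODE_IP and NODE_PORT are placeholders for the values of your cluster.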

Conclusion

With this, we have successfully looked into the sidecar design pattern using a Kubernetes pod example. In this post, we covered the theoretical aspects of the sidecar pattern and its different use-cases. Finally, we implemented a complete Git workflow in a Kubernetes pod to demonstrate the sidecar pattern.

How did you find this post? Have you used sidecar pattern in your application?

If yes, do share your thoughts on this pattern and whether you found it to be useful.