Photo by Marcin Jozwiak on Unsplash

5 Challenges in building Distributed Systems

And how to overcome these challenges?

Table of contents

Distributed systems are all the rage these days.

Whenever I visit a tech publication on the internet, I usually find a flurry of posts going on about the benefits of distributed systems. Everyone seems to be enthralled with the general idea of distributed systems and the seeming advantages they bring to the table.

While there is no harm in creating informational content that can help people learn, I find that many times distributed applications are projected as easy-to-build stuff.

The reality, however, is quite different.

Building and operating distributed systems is hard. Don't let someone tell you otherwise.

The task of creating a distributed system is fraught with challenges. Ironically, many of the challenges stem from the benefits that make these systems attractive in the first place.

You should not ignore these challenges when trying to build a distributed system. If you do so, you'd end up in a world of trouble.

However, if you embrace these challenges and factor them into your design, you can reap the true benefits of distributed systems.

Let's look at these challenges one by one.

Communication

A distributed system is distributed.

Multiple nodes. Probably separated geographically.

No distributed system can function without communication between its various nodes.

Even the seemingly simple task of browsing a website in your web browser requires a significant amount of communication between different processes.

When we visit a URL, our browser contacts the DNS to resolve the server address of the URL. Once it gets hold of the address, it sends an HTTP request to the server over the network. The server processes the request and sends back a response.

System designers should ask a few important questions regarding the communication aspects.

How are request and response messages represented over the wire?

What happens in the case of a network outage?

How to guarantee security from snooping?

We can handle many of these communication challenges by falling back on abstractions such as TCP and HTTPS.

However, abstractions can leak. For example, TCP attempts to provide a complete abstraction of the underlying unreliable network. But TCP cannot do much if the network cable is cut or overloaded. When this happens, the challenge falls back on the system designer.

Coordination

Imagine there are two generals with their respective armies. They need to coordinate with each other to attack a city at the same time. Only if they attack at the same time, they can capture the city. For that, they need to agree on the time of the attack.

Since the armies are geographically separated, the generals can communicate only by sending a messenger. Unfortunately, the messengers have to go through enemy territory and may get captured.

How can the two generals agree about the time of the attack?

One of them could propose a time to the other by sending a messenger and waiting for the response.

But what if no response arrives? Could the messenger have been captured? Could the messenger be injured and take longer than expected? Should the general send another messenger?

The problem is not trivial.

No matter how many messengers are sent, neither general can be sure that the other army will attack the city at the correct time. Sending more messengers can increase the chances of successful coordination, but the chances can never reach 100%.

In the context of distributed systems, this problem is known as the two-general problem.

Generals are like nodes in a distributed system. To make the system work, the nodes should coordinate with each other. However, a node can fail at any time due to a fault.

While starting, every developer feels they will build a fault-free system. I used to think the same way. Of course, it was a naive pursuit that was bound to fail.

You cannot build a system completely free from faults. Larger the distributed systems, the higher the probability of faults.

A fault-tolerant system can continue to operate despite the presence of one or more faults. The trick is to make the nodes in the system coordinate with each other in the presence of failures.

Scalability

Scalability often commands the greatest attention from system designers when building distributed systems.

At a basic level, scalability is a measure of a system’s performance with increasing load.

But how to measure the performance and load of a distributed system?

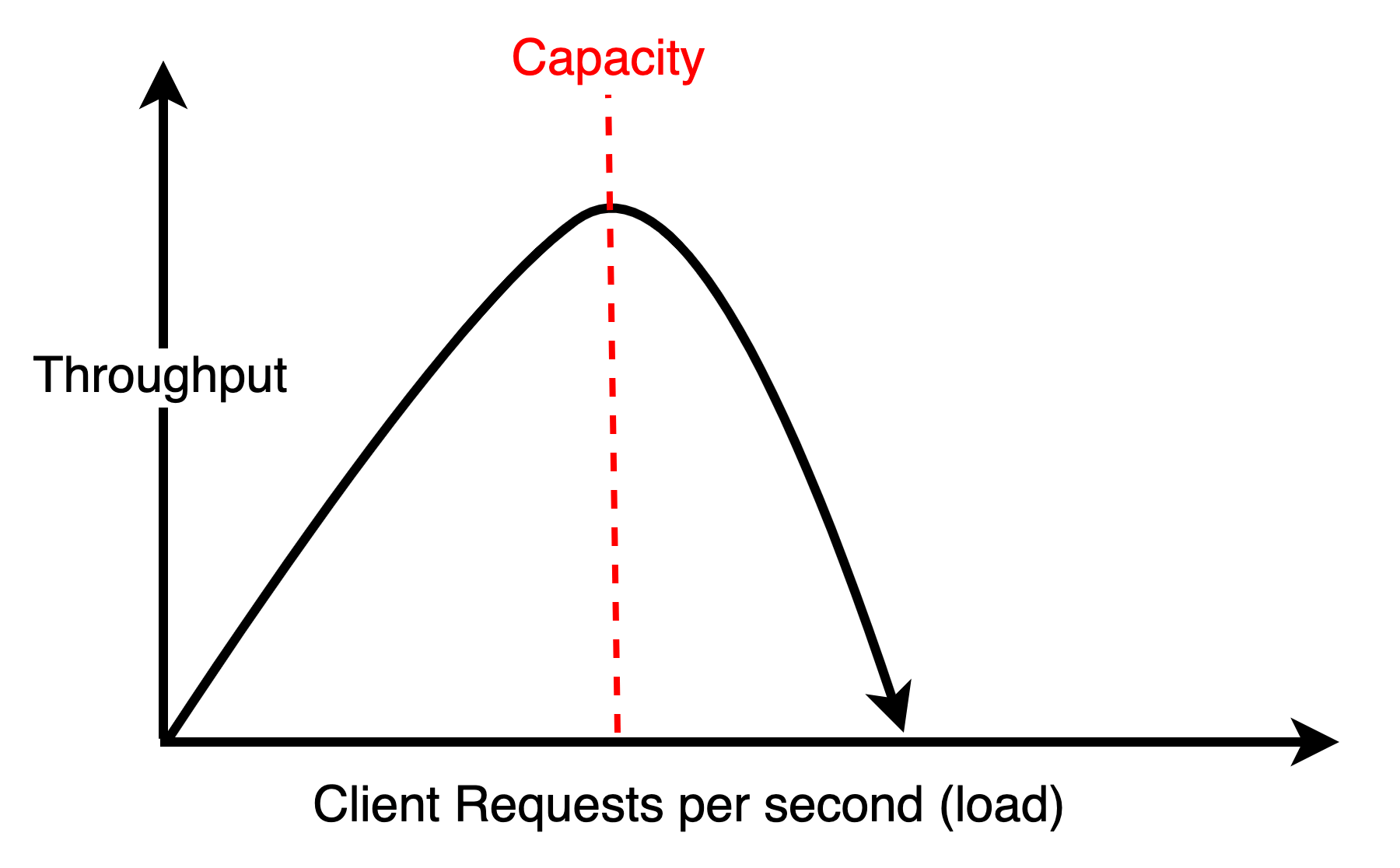

For performance, we can use two excellent parameters - throughput and response time. Throughput denotes the number of operations processed per second. Response time is the total time elapsed between a client request and response.

Measurement of system load is more specific to the system use case. For example, system load can be measured as the number of concurrent users, communication links or the ratio of writes to reads.

Performance and load are inherently tied to each other. As the load increases, it will eventually reach the system’s capacity. The capacity may depend on the physical limitations of the system such as:

The memory size or the clock cycle of the node

Bandwidth and latency of the network links

When the load reaches capacity, the system’s performance either plateaus or worsens.

If the load on the system continues to grow beyond the capacity, it will eventually hit a point where most operations fail or time out. Throughput goes down and response times rocket upwards. At this point, the system is no longer scalable. See the below graph that shows this situation.

How do we make the system scalable?

If the load exceeding capacity is the cause of performance degradation, we can make a system scalable by increasing the capacity.

A quick and easy way to increase capacity is by buying more expensive hardware with better performance metrics. This approach is known as scaling up.

Though it sounds good on paper, this approach will hit a brick wall sooner or later.

The more sustainable approach is scaling out by adding more machines to the system.

Resiliency

Failures are pretty common in a distributed system. There are several reasons:

Firstly, scaling out increases the probability of failure. Since every component in the system has an inherent probability of failing, the addition of more pieces compounds the overall chances of failure.

Second, more components mean a higher number of operations. This increases the absolute number of failures in the system.

Lastly, failures are not independent. Failure of one component increases the probability of failures in other components.

When a system is operating at scale, any failure that can happen will eventually happen.

An ideal distributed system must embrace failures and behave in a resilient manner. A system is considered resilient when it continues to do its job even when failures occur.

We can use several techniques such as redundancy and self-healing mechanisms to increase a system's resiliency.

However, this is not a zero-sum game.

No distributed system can be 100% resilient. At some point, failures are going to put a dent in the system’s availability.

Availability is an important metric. It is the amount of time the application can serve requests divided by the duration of the period measured.

Organizations often market their system’s availability in percentage terms using the concept of nines.

Three nines (99.9%) are mostly considered acceptable for a large number of systems. Anything above four nines (99.99%) is highly available. An even higher number of nines may be needed for mission-critical systems.

Here’s a handy chart that demonstrates the availability percentages in terms of actual downtime.

| AVAILABILITY % | DOWNTIME PER DAY |

| 90% (one nine) | 2.40 hours |

| 99% (two nines) | 14.40 minutes |

| 99.9% (three nines) | 1.44 minutes |

| 99.99% (four nines) | 8.64 seconds |

| 99.999% (five nines) | 864 milliseconds |

Operations

Of all the other challenges, operating a distributed system is probably the most difficult. But I have also seen the most significant innovations happening in this area.

Just like any other software system, distributed systems also need to go through the software development lifecycle of development, testing, deployment and operations.

However, gone are the days when developing a piece of software and operating it used to be done by two separate teams or departments. The complexity of distributed systems has given rise to DevOps.

Now, the same team that designs and develops a system is expected to operate it in a production environment. This creates several difficulties for the development team that they ignored earlier.

New deployments need to be rolled out continuously in a safe manner

Systems need to be observable

Alerts need to be fired when service-level objectives are at risk of being breached

There is a positive side to this change as well. There is no better way for developers to find out where a system is falling short than by providing on-call support for it.

Just make sure to get properly paid for ruining your weekends!

Conclusion

Building distributed systems is not an easy task. It is fraught with multiple challenges that revolve around the areas of communication, coordination, scalability, resiliency and operations.

But solving these challenges is rewarding. When a distributed system works as per plan, it creates a special dance of components that is wonderful to watch and admire.

Have you seen this dance? What challenges did you overcome to reach the point where your system worked according to plan?

Do share your thoughts in the comments section below.

If you enjoyed this article or found it helpful, let's connect. Please hit the Subscribe button at the top of the page to get an email notification on my latest posts.

You can also connect with me on other platforms: